The Current Wave

OpenAI follows a very particular strategy when it comes to scheduling their big announcements: they take a look at Google’s event calendar and schedule their own the day before. This isn't the first time this has happened, and it probably won’t be the last.

Both companies announced significant updates, some of them live, and some coming soon. In this issue, I cover OpenAI and will save Google for the next one.

GPT-4o: it’s Her

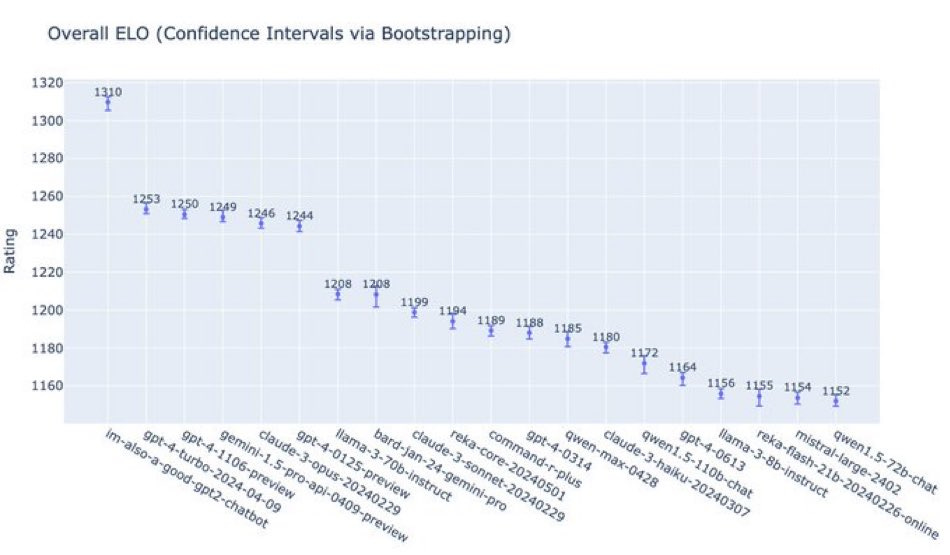

OpenAI’s new model, GPT-4o, has been out in the wild for a couple of weeks. It is the mysterious “im-also-a-good-gpt2-chatbot” model that dominated the LMSYS leaderboard:

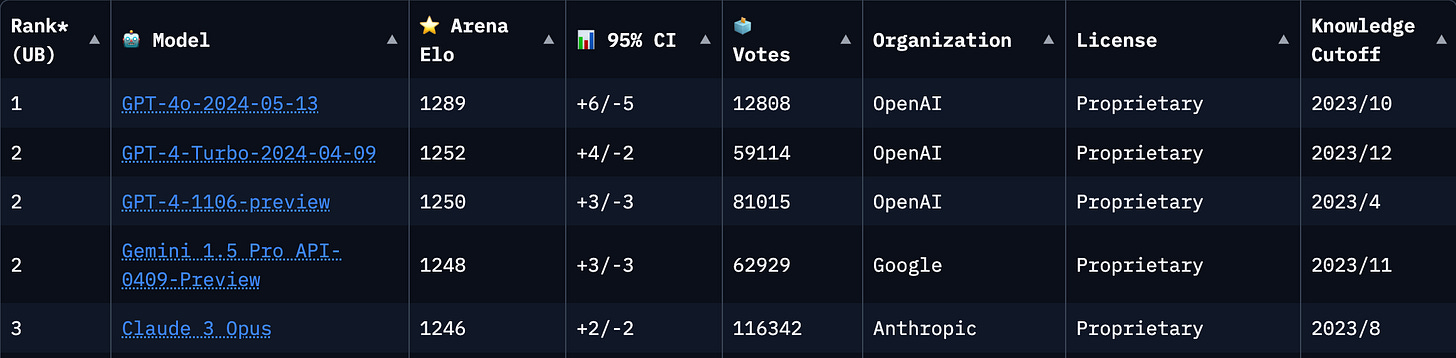

GPT-4o is impressive, but not this impressive. The teaser did its job: people sometimes picked the good-gpt2-chatbot just because of the hype. Now we have a more realistic picture of the model’s performance.

This is still an incredibly strong update — even stronger than what the leaderboard might suggest. The LMSYS Chatbot Arena is text-based; GPT-4o’s magic, however, is in the “o” for “omni”.

GPT-4o is a natively multimodal LLM, capable of working with voice, images, and video. I won’t discuss the demo in detail — there are dozens of other newsletters for that, and you should watch the live stream replay anyway (it’s really worth it, only 26 minutes).

I am much more interested in the bigger picture.

GPT-4o is the beginning of a new era

The release of GPT-4o is transformative in two ways.

First, the voice interface is phenomenal, enabling natural, real-time conversations. You can interrupt the AI, interject, and ask follow-up questions, all in a natural flow with almost zero latency. It feels like the movie Her and transforms the experience of talking to an AI. OpenAI was able to achieve this by building voice directly into GPT-4o instead of chaining separate models together, with Whisper transcribing the audio input and a text-to-speech model generating the output (the details are unknown because OpenAI is not especially… open). Even crazier, the model can recognize and express emotions through voice, and in the demo it did so remarkably convincingly.

This marks the dawn of a new era.

Typing is not something humans naturally do. It is a terribly inefficient, low-bandwidth form of communication, and we use it only because we don’t have anything better. Companies have tried hard to develop “voice assistants,” most of them embarrassingly bad (Siri, Bixby, Cortana). But even the better ones like Google Assistant or Alexa look like a joke compared to GPT-4o. They are not even in the same league. It’s the Large Hadron Collider vs. a pocket calculator. It’s Real Madrid vs. Nowheresville-on-the-Boondocks.

With GPT-4o, for the first time in the history of computing (not AI, computing!), voice might become the primary user interface.

Note that the new voice mode is not available yet, but it will be rolled out in the next few weeks.

The second transformative effect of GPT-4o has nothing to do with the model's capabilities. It’s about pricing and accessibility.

GPT-4o will replace the aging GPT-3.5 model in the free tier of ChatGPT. GPT-3.5 is an old model with a knowledge cutoff date of September 2021, no online browsing capabilities, and no code interpreter in the free version. Yet, this is the model most people have hands-on experience with because it costs nothing — meaning that most people have no idea how good AI has become in the last year.

This also means a complete reset of the competitive landscape. Why should anyone pay $20 a month for Claude, Gemini, Copilot Pro, or any other service when they can access a much better model for free? (Why should anyone pay for ChatGPT Plus, for that matter?)

It is a devastating move for competitors, but it is not entirely clear how OpenAI will pay for all the inference costs. Well, maybe it won’t be them paying… which leads us to our next topic.

The uneasy Apple partnership

We’ve also learned (not on the live stream, but at roughly the same time) that OpenAI signed a partnership with Apple: ChatGPT is coming to iOS 18. Apple had been talking to Google for a long time about a similar partnership to add Gemini to iOS (I wrote about it in detail in AI Current #7), but those negotiations fell through.

For OpenAI, Apple is an ideal partner, and the deal carries no material risks.

At a minimum, ChatGPT will be pre-installed as a default app on every new iPhone; but a tighter integration is also possible, replacing or augmenting Siri (it largely depends on how Apple is progressing with their own on-device LLM, which I covered in AI Current #17). We are talking about 1.5 billion devices worldwide—an incredible jump in OpenAI’s reach.

The partnership looks a little different from Apple’s perspective.

The truth is, Apple’s options are limited. They must add AI to iOS 18 or risk a collapse in sales, and they don’t have anything nearly good enough in-house. Apple can stay in the game with this partnership, but the position they find themselves in is anything but comfortable.

First, they won’t have any control over the direction, speed, and quality of ChatGPT. Outsourcing a key feature of your flagship product to a third party is about the most dangerous thing a company can do, especially at Apple’s scale.

Second, it is unlikely that OpenAI has the compute capacity for the inference needs of 1.5 billion users. Only Google does. So, Apple will need to invest very heavily in inference infrastructure. If you’ve ever wondered why they are developing their own AI server processors, now it’s all much more obvious.

Thanks for reading the AI Current!

I publish this newsletter twice a week on Tuesdays and Fridays at the same time on Substack and on LinkedIn.

Don’t be a stranger! Hit me with a LinkedIn DM or say hi in the Substack chat.

I work at Appen, one of the world’s best AI training data companies. However, I don’t speak for Appen, and nothing in this newsletter represents the views of the company.